The service support system provides the following:

- computing service,

- storage services,

- GRID systems,

- data transfer services,

- information services (monitoring of servers, storage, data transfer, and information sites).

The Slurm workload manager is used to launch data processing tasks. The grid environment uses Advanced Resource Connector (ARC), a grid computing middleware. It provides a common interface for transferring computing tasks to various distributed computing systems and can include grid infrastructures of varying size and complexity.
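As a rough illustration of how a processing task might be handed to Slurm, the sketch below assembles an `sbatch` command line in Python. The job name, core count, time limit, and payload script are hypothetical placeholders, not values taken from the T1_RU_JINR configuration. The function only builds the argument list and does not submit anything.

```python
import shlex


def build_sbatch_command(job_name, cpus, time_limit, payload):
    """Return an sbatch argument list for a single-task batch job.

    All argument values are illustrative assumptions; the site's real
    partitions, accounts, and limits are not described on this page.
    """
    return [
        "sbatch",
        f"--job-name={job_name}",
        "--ntasks=1",
        f"--cpus-per-task={cpus}",
        f"--time={time_limit}",
        f"--wrap={payload}",
    ]


# Hypothetical example: a 16-core job running a placeholder script.
cmd = build_sbatch_command("demo-job", 16, "01:00:00", "./process_data.sh")
print(shlex.join(cmd))
```

Passing the resulting list to `subprocess.run` on a submit host would queue the job; that step is left out here because it depends on local Slurm settings.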

The dCache and EOS systems are used as the main data storage systems. AFS is used for user home directories. CVMFS, a system for distributed access to and organization of software versions for collaborations and user groups, is used to store project software.

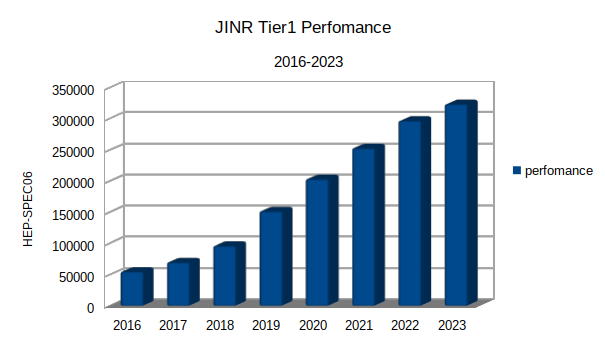

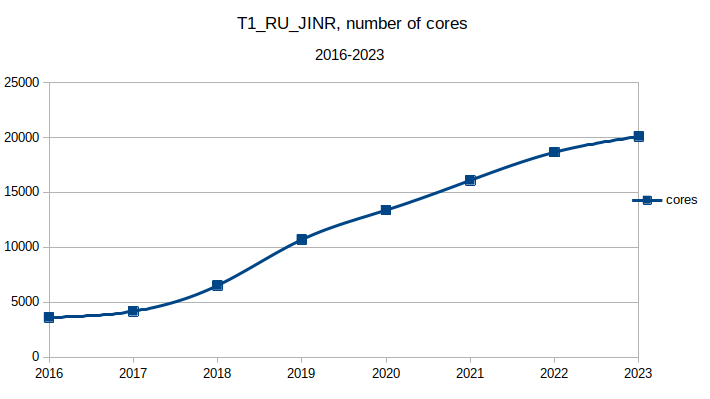

Tier1 farm configuration (T1_RU_JINR):

The CMS experiment data processing jobs are launched by 16-core pilots, and all computing resources are available to them.

Computing farm (CE)

2025Q3-Q4:

23360 cores; performance 427920.04 HEP-SPEC06; average 18.32 HEP-SPEC06 per core; CMS can take up all cores, NICA up to 4000 cores.
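The 2025Q3-Q4 figures can be cross-checked with a few lines of arithmetic. The sketch below uses only the numbers quoted above. The pilot-slot count is an upper bound that assumes every core is available to 16-core pilots and ignores how cores are distributed across worker nodes.

```python
# Figures quoted for 2025Q3-Q4 on this page.
total_cores = 23360
total_hs06 = 427920.04
nica_max_cores = 4000
pilot_cores = 16  # CMS pilots are 16-core

avg_hs06_per_core = total_hs06 / total_cores
max_pilot_slots = total_cores // pilot_cores  # upper bound, ignores node boundaries
nica_share = nica_max_cores / total_cores

print(f"Average HEP-SPEC06 per core: {avg_hs06_per_core:.2f}")
print(f"Upper bound on concurrent 16-core pilots: {max_pilot_slots}")
print(f"Maximum NICA share of cores: {nica_share:.1%}")
```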

2025Q2:

Support for the RHEL 7 OS distribution and its clones (SL7, SLC7, CentOS 7) has ended. Most CICC computers (Tier 2, Tier 1) are being switched to AlmaLinux 9, which is an almost complete clone of RHEL 9.
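During such a migration it can be handy to tell which generation a node is running. The following is a minimal sketch, not the procedure used at the site: it reads the `VERSION_ID` field from text in the `os-release` format and extracts the major version. The sample strings are illustrative and were not collected from CICC machines, and the helper assumes a numeric `VERSION_ID`.

```python
def el_major_version(os_release_text):
    """Return the major version from VERSION_ID in os-release text, or None.

    Assumes VERSION_ID, when present, starts with an integer (as on
    RHEL-family systems).
    """
    for line in os_release_text.splitlines():
        key, sep, value = line.partition("=")
        if sep and key.strip() == "VERSION_ID":
            return int(value.strip().strip('"').split(".")[0])
    return None


def needs_migration(os_release_text):
    """True for release 7 systems, whose support has ended."""
    return el_major_version(os_release_text) == 7


# Illustrative samples only.
sample_new = 'NAME="AlmaLinux"\nID="almalinux"\nVERSION_ID="9.6"\n'
sample_old = 'NAME="CentOS Linux"\nVERSION_ID="7"\n'

print(el_major_version(sample_new), needs_migration(sample_new))
print(el_major_version(sample_old), needs_migration(sample_old))
```

On a live node the text would typically come from `/etc/os-release`.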

2025Q1:

468 WNs; 20096 cores; performance 323820.54 HEP-SPEC06 (84×433.15 + 14×610 + 80×1100 + 140×431.88 + 56×610 + 14×625.31 + 80×1093.98); average 16.11 HEP-SPEC06 per core.
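The 2025Q1 total is a sum over hardware groups, each contributing its number of worker nodes multiplied by the per-node HEP-SPEC06 score. A short sketch reproducing the quoted totals from that breakdown, assuming the first factor in each product is the node count:

```python
# (number of worker nodes, HEP-SPEC06 per node) for each hardware group,
# copied from the 2025Q1 breakdown on this page.
node_groups = [
    (84, 433.15),
    (14, 610.0),
    (80, 1100.0),
    (140, 431.88),
    (56, 610.0),
    (14, 625.31),
    (80, 1093.98),
]
total_cores = 20096

total_nodes = sum(count for count, _ in node_groups)
total_hs06 = sum(count * score for count, score in node_groups)
avg_per_core = total_hs06 / total_cores

print(f"Worker nodes: {total_nodes}")
print(f"Total HEP-SPEC06: {total_hs06:.2f}")
print(f"Average HEP-SPEC06 per core: {avg_per_core:.2f}")
```

The node counts add up to the 468 WNs stated, which supports reading the first factor as a node count.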

Storage systems (SE)

2025Q4:

- EOS: 20869.64 TB

- MPD EOS: 7030.71 TB

- CMS @ dcache dsk: 15028.33 TB

- DTEAM @ dcache dsk: 3.22 TB

- OPS @ dcache dsk: 3.22 TB

- CMS @ dcache mss: 2284.52 TB

- DTEAM @ dcache mss: 1.93 TB

- OPS @ dcache mss: 3.22 TB

- Tapes @ Enstore: 57948.00 TB

- Tape robot: 101.5 PB, IBM TS3500 (11.5 PB) + IBM TS4500 (90 PB)

- CVMFS: 2 squid servers; CVMFS cache: 140 TB
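A small sketch that totals the 2025Q4 dCache figures per pool type and checks that the two tape libraries add up to the quoted robot capacity. The grouping into `dsk` and `mss` follows the labels in the listing. The per-pool totals are derived here and are not figures published on this page.

```python
# Capacities copied from the 2025Q4 listing on this page (TB unless noted).
dcache_tb = {
    "dsk": {"CMS": 15028.33, "DTEAM": 3.22, "OPS": 3.22},
    "mss": {"CMS": 2284.52, "DTEAM": 1.93, "OPS": 3.22},
}
# Library capacities in PB; the second model is taken to be the TS4500
# named elsewhere on this page.
tape_libraries_pb = {"IBM TS3500": 11.5, "IBM TS4500": 90.0}

pool_totals_tb = {pool: sum(per_vo.values()) for pool, per_vo in dcache_tb.items()}
tape_total_pb = sum(tape_libraries_pb.values())

for pool, total in pool_totals_tb.items():
    cms_share = dcache_tb[pool]["CMS"] / total
    print(f"dCache {pool}: {total:.2f} TB total, CMS share {cms_share:.2%}")
print(f"Tape robot capacity: {tape_total_pb} PB")
```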

2025Q3:

- EOS: 20445.18 TB

- MPD EOS: 7030.71 TB

- CMS @ dcache dsk: 12299.02 TB (12299016.95 GB)

- DTEAM @ dcache dsk: 3.87 TB (3865.39 GB)

- OPS @ dcache dsk: 3.22 TB (3220.33 GB)

- CMS @ dcache mss: 2289.26 TB (2289258.94 GB)

- DTEAM @ dcache mss: 1.93 TB (1932.72 GB)

- OPS @ dcache mss: 3.22 TB (3221.20 GB)

- Tapes @ Enstore: total 57948.00 TB; CMS: 20744.00 TB

- Tape robot: 101.5 PB, IBM TS3500 (11.5 PB) + IBM TS4500 (90 PB)

- CVMFS: 2 squid servers; CVMFS cache: 140 TB
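The 2025Q3 dCache entries are given in both TB and GB. The sketch below checks that each pair agrees under the decimal convention 1 TB = 1000 GB, within the rounding of a two-decimal TB value. The tolerance and the helper name are choices made for this illustration.

```python
# (label, value in TB, value in GB) copied from the 2025Q3 listing.
pairs = [
    ("CMS @ dcache dsk", 12299.02, 12299016.95),
    ("DTEAM @ dcache dsk", 3.87, 3865.39),
    ("OPS @ dcache dsk", 3.22, 3220.33),
    ("CMS @ dcache mss", 2289.26, 2289258.94),
    ("DTEAM @ dcache mss", 1.93, 1932.72),
    ("OPS @ dcache mss", 3.22, 3221.20),
]


def consistent(tb, gb, tolerance_tb=0.005):
    """True if gb / 1000 matches tb within two-decimal rounding."""
    return abs(tb - gb / 1000) <= tolerance_tb


mismatches = [label for label, tb, gb in pairs if not consistent(tb, gb)]
print("All TB/GB pairs consistent" if not mismatches else mismatches)
```

With a binary factor of 1024 the pairs would not match, so the listing appears to use decimal units.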

2025Q1:

EOS 20013TB;

MPD EOS 7030.71TB;

cvmfs 140TB;

afs 12.5TB;

dcache 12450.84TB;

mss 2362.23TB;

Tapes@Enstore 35496.00 TB;

tape robot 91.5PB;

Robot TS3500 is served by the CTA installation, 11 PB (no WLCG);

Robot TS4500 is served by Enstore and CTA installations together.

CVMFS: 2 squid servers; CVMFS cache: 140 TB

Data storage is provided by the dCache system, robotic tape storage managed by Enstore software, and the EOS system.

Software:

2025Q3:

- Scientific Linux 7.9 and AlmaLinux 9.6

- EOS 5.2.32

- dCache 8.2

- EOSCTA

- Enstore 6.3

- SLURM 25.05.3

- VOMS

- OpenAFS

- CVMFS

- grid: UMD4 + EPEL (current version), UMD4/5 + EPEL (current version)

- ARC-CE

- For NICA: FairSoft, FairRoot, MPDroot

====================================================================


Infrastructure of the Tier1 (T1_RU_JINR) 2025

Infrastructure of the Tier1 (T1_RU_JINR) 2024

Infrastructure of the Tier1 (T1_RU_JINR) 2023

Infrastructure of the Tier1 (T1_RU_JINR) 2022

Infrastructure of the Tier1 (T1_RU_JINR) 2021

Infrastructure of the Tier1 (T1_RU_JINR) 2020

Infrastructure of the Tier1 (T1_RU_JINR) 2019

Infrastructure of the Tier1 (T1_RU_JINR) 2018

Infrastructure of the Tier1 (T1_RU_JINR) 2017

Infrastructure of the Tier1 (T1_RU_JINR) 2016